Generative AI’s release to the world less than three years ago triggered a frenzy of new “products,” as well as predictions and criticisms of the technology. Over the past year, the term “AI agents” has appeared more frequently in the news.

The July 24, 2024, issue of the McKinsey Quarterly included an article by Lareina Yee, Michael Chui, and Roger Roberts, with Stephen Xu, explaining “Why agents are the next frontier of generative AI.” The article opens with the statement:

We are beginning an evolution from knowledge-based, Gen AI-powered tools—say, chatbots that answer questions and generate content—to Gen AI-enabled ‘agents’ that use foundation models to execute complex, multistep workflows across a digital world.

The authors note that agentic systems “refer to digital systems that can independently interact in a dynamic world.” They also acknowledge that such systems have been around for a while but note that “the natural language capabilities of gen AI unveil new possibilities.” (WEB: I recall the success of Robotic Process Automation (RPA) software implementations, some of which were touted as machine learning/AI.)

The McKinsey authors write that agentic systems have been difficult to implement, requiring laborious, rule-based programming or highly specific training of machine-learning models. According to them, Gen AI changes that: agentic systems built on Gen AI foundation models can respond to prompts on which they have not been trained.

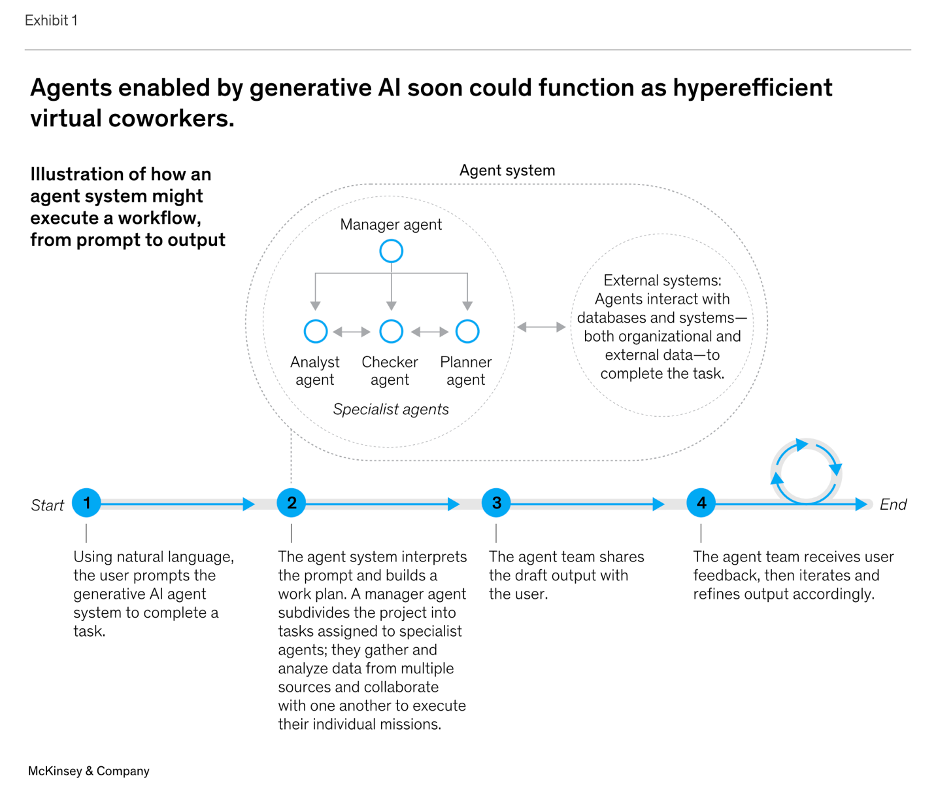

Furthermore, human users could direct a Gen AI-enabled agent system to accomplish a complex workflow. A system composed of multiple agents could interpret the newly created workflow, organize it into actionable tasks and flows assigned to specialized agents, and then execute more complex tasks using other computer tools.

Yee, Chui, and Roberts write that the technology is still in “its nascent stage,” but in the past year, Google, Microsoft, OpenAI, and others have invested in software libraries and frameworks to support agentic functionality. The pace of development could lead to agents “being as commonplace as chatbots are today.”

Value Is in Automation

According to the McKinsey team, agents have the potential to automate many use cases characterized by highly variable inputs and outputs, many of which have been difficult to automate cost-effectively or in a timely manner.

Booking a business trip is one example. It can involve many different itineraries, including different airlines and flights, different hotels (including those in the traveler’s preferred rewards programs), restaurant reservations, and other activities. While many of these individual activities have been automated, assembling the entire itinerary has usually been done manually by the traveler or his/her executive assistant.

There are three ways that agents can simplify the automation of complex use cases:

- Agents can manage multiplicity.

- Agents can be directed with natural language.

- Agents can work with existing software tools and platforms.

Agents already have the ability to break down complex tasks into subtasks, each executed with its own instruction set and data sources. The process follows four steps:

- User provides instruction.

- Agent system plans, allocates, and executes work.

- Agent system iteratively improves output.

- Agent executes action.
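To make the four steps concrete, here is a minimal sketch of the control flow, using the business-trip example from earlier. It assumes that each "agent" is a plain Python function standing in for an LLM call; the names `flight_agent`, `hotel_agent`, `plan`, `critique`, `run`, and `act` are hypothetical, not from the McKinsey article or any framework. The stub agents "revise" simply by recording the critic's feedback, so the loop ends after one round; in a real system the planner, specialists, and critic would each be model calls.

```python
from typing import Callable

# Hypothetical specialist agents. In a real system each would wrap an LLM call
# and its tools; plain functions keep the control flow visible.
def flight_agent(task: str, feedback: str = "") -> str:
    draft = f"flight options for: {task}"
    # A real agent would rewrite its draft; this stub just records the feedback.
    return f"{draft} (revised: {feedback})" if feedback else draft

def hotel_agent(task: str, feedback: str = "") -> str:
    draft = f"hotel options for: {task}"
    return f"{draft} (revised: {feedback})" if feedback else draft

SPECIALISTS: dict[str, Callable[..., str]] = {
    "flight": flight_agent,
    "hotel": hotel_agent,
}

def plan(instruction: str) -> list[str]:
    """Step 2 (plan and allocate): choose which specialists to involve.
    A real planner would be an LLM; this stub matches keywords."""
    return [name for name in SPECIALISTS if name in instruction.lower()]

def critique(output: str, requirement: str) -> str:
    """Step 3 (critic): return feedback, or an empty string if acceptable."""
    return "" if requirement in output else f"address {requirement}"

def run(instruction: str, requirement: str, max_rounds: int = 3) -> dict[str, str]:
    results: dict[str, str] = {}
    for name in plan(instruction):            # Step 2: allocate work
        agent = SPECIALISTS[name]
        output = agent(instruction)           # Step 2: execute
        for _ in range(max_rounds):           # Step 3: iterate on the output
            feedback = critique(output, requirement)
            if not feedback:
                break
            output = agent(instruction, feedback)
        results[name] = output
    return results

def act(results: dict[str, str]) -> str:
    """Step 4 (act): here we only summarize; a real agent might place bookings."""
    return "; ".join(f"{name}: {text}" for name, text in results.items())

# Step 1: the user provides an instruction in natural language.
itinerary = run("Book a flight and a hotel for the Chicago trip", requirement="rewards")
print(act(itinerary))
```

Separating the planner, the specialists, and the critic mirrors the article's description of work being allocated to specialized agents and then iteratively improved before any action is taken.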

The authors provide an example of how this process works in Exhibit 1 below.

Three Use Cases Proposed

While the process illustrated in Exhibit 1 is highly theoretical, the authors provide three use cases that illustrate what could be possible once agent systems are available.

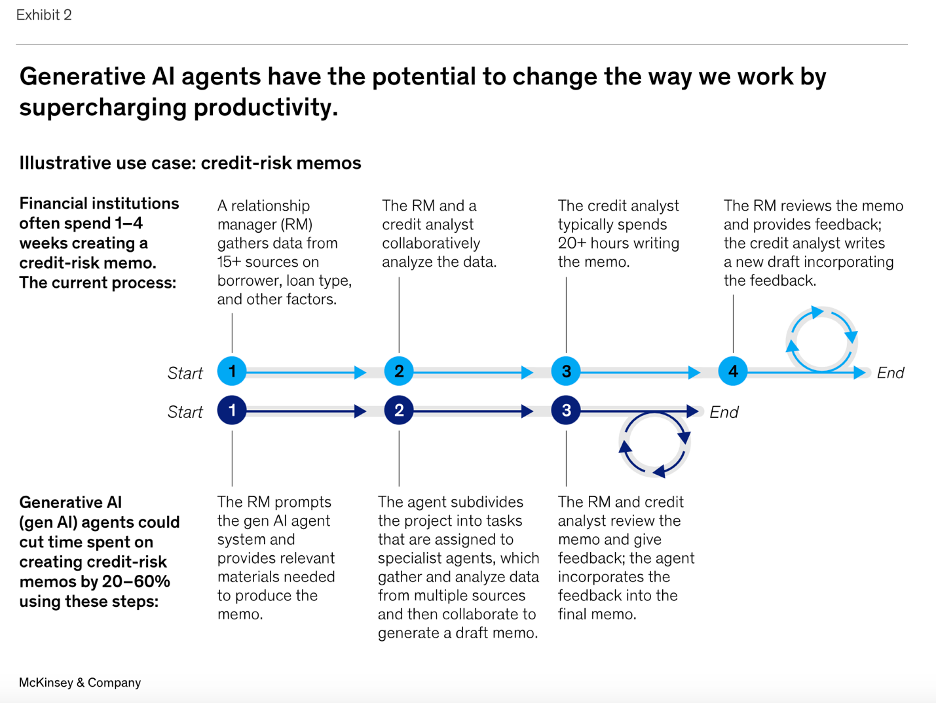

Use case 1: Loan underwriting – generating credit risk memos

Exhibit 2 below illustrates the difference between the current credit risk memo generation process and one that utilizes agents to perform subtasks.

The authors claim that the agent architecture could cut time spent on credit-risk memos by 20-60%. Depending on the size of the bank, those efficiency percentages could represent significant savings.
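A back-of-the-envelope calculation shows how the 20% and 60% bounds translate into savings. Every other input here (memo volume, hours per memo, hourly cost) is a hypothetical placeholder, not a figure from the McKinsey article; substitute a bank's own numbers to get a meaningful estimate.

```python
# Every input below is a hypothetical placeholder, not a figure from McKinsey;
# only the 20% and 60% bounds come from the authors.
MEMOS_PER_YEAR = 2_000   # hypothetical memo volume for one bank
HOURS_PER_MEMO = 20      # hypothetical analyst hours per memo today
COST_PER_HOUR = 100      # hypothetical fully loaded cost, USD

def annual_savings(reduction: float) -> tuple[float, float]:
    """Return (hours saved, dollars saved) for a fractional time cut, e.g. 0.2 = 20%."""
    hours = MEMOS_PER_YEAR * HOURS_PER_MEMO * reduction
    return hours, hours * COST_PER_HOUR

for reduction in (0.20, 0.60):
    hours, dollars = annual_savings(reduction)
    print(f"{reduction:.0%} cut: {hours:,.0f} hours, ${dollars:,.0f} per year")
# 20% cut: 8,000 hours, $800,000 per year
# 60% cut: 24,000 hours, $2,400,000 per year
```

Because the savings scale linearly with memo volume, a bank producing ten times as many memos would see ten times these figures.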

Use case 2: Code documentation and modernization

Many articles have touted the ability of generative AI tools to write computer code at accuracy rates approaching those of a highly trained, experienced programmer. The authors note that legacy systems at large companies slow the rate of business innovation, yet modernizing those systems by reviewing and revising their code can be complex, costly, and time-consuming.

According to the authors, agents could help streamline this process. In Step 1, a specialized agent would analyze old code, document it, and translate code segments. In Step 2, a quality assurance agent would critique the documentation and produce test cases, allowing the AI agent (Step 3) to iterate and refine its output for greater accuracy. Once built, a framework like this could be reused across multiple software migration scenarios.
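The translate, test, and refine loop can be sketched in a few lines. This is a minimal illustration under stated assumptions: `legacy_interest` is a hypothetical legacy routine, the "translation agent" is a stub that walks through canned drafts instead of calling an LLM, and the documentation part of Step 1 is omitted. The quality assurance agent compares each draft against the legacy code on test cases and feeds mismatches back until a draft passes.

```python
from typing import Callable

Interest = Callable[[int, int], int]

def legacy_interest(principal_cents: int, rate_bp: int) -> int:
    """Hypothetical legacy routine: interest in cents, truncating integer math."""
    return principal_cents * rate_bp // 10_000

# Stand-in for the translation agent (Step 1). A real agent would send the legacy
# source plus the QA feedback to an LLM; this stub just walks through canned drafts.
DRAFTS: list[Interest] = [
    lambda p, r: round(p * r / 10_000),  # draft 1: rounds, so behavior drifts
    lambda p, r: p * r // 10_000,        # draft 2: matches the legacy truncation
]

def translate(attempt: int, feedback: list[str]) -> Interest:
    # feedback is unused by this stub; a real agent would condition on it.
    return DRAFTS[min(attempt, len(DRAFTS) - 1)]

def qa_agent(candidate: Interest, cases: list[tuple[int, int]]) -> list[str]:
    """Step 2: run test cases against legacy and candidate, report mismatches."""
    failures = []
    for p, r in cases:
        expected, got = legacy_interest(p, r), candidate(p, r)
        if expected != got:
            failures.append(f"({p}, {r}): expected {expected}, got {got}")
    return failures

def modernize(cases: list[tuple[int, int]], max_rounds: int = 5) -> tuple[int, Interest]:
    """Step 3: iterate translate -> QA until the test cases pass."""
    feedback: list[str] = []
    for attempt in range(max_rounds):
        candidate = translate(attempt, feedback)
        feedback = qa_agent(candidate, cases)
        if not feedback:
            return attempt + 1, candidate
    raise RuntimeError("no passing translation: " + "; ".join(feedback))

rounds, accepted = modernize([(10_000, 500), (12_345, 375)])
print(rounds)  # 2: draft 1 fails on (12345, 375), draft 2 passes
```

The key design choice is that the legacy code itself serves as the test oracle, so "accuracy" means behavioral equivalence rather than a reviewer's impression of the translation.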

Use case 3: Online marketing campaign creation

Use case 3 was a surprise to me. I agree with the two introductory sentences:

- “Designing, launching, and running an online marketing campaign tends to involve an array of different software tools, applications, and platforms.”

- “[And] the workflow for an online marketing campaign is highly complex.”

McKinsey’s proposed agent-based solution sounds like too much of a stretch to me, given today’s technology. Their proposed process sequences the following steps:

- A marketer could describe targeted users, initial ideas, intended channels, and other parameters in natural language.

- With assistance from marketing professionals, an agent system would help develop, test, and iterate different campaign ideas.

- A digital marketing strategy agent could tap online surveys, analytics from customer relationship management solutions, and other market research platforms aimed at gathering insights to craft strategies using multimodal foundation models.

- Agents for content marketing, copywriting, and design could then build tailored content, which a human evaluator would review for brand alignment.

- These agents would collaborate to iterate and refine outputs and align toward an approach that optimizes the campaign’s impact while minimizing brand risk.

I’m skeptical that an agent system could accomplish the tasks listed in this use case any time soon. I’m also skeptical that a corporation would spend the time and the money attempting to build a system of agents designed for this specific use case. Given the technology as it exists, I think it’s too soon.

How to Prepare for Using Agents

Even though the technology is admittedly in a nascent stage, the authors suggest that companies increasing their investments in these tools could build agentic systems that can be deployed at scale over the next few years. Identifying potential use cases can encourage organizations to explore tools and frameworks such as Microsoft AutoGen, Hugging Face, and LangChain to understand what is relevant.

There are three factors that organizations should consider for these agentic systems to perform to their potential:

- Codification of relevant knowledge: Implementing complex use cases will require organizations to define and document business processes into codified workflows that are used to train agents.

- Strategic tech planning: Organizations will need to organize their data and IT systems to ensure that agent systems can interface effectively with existing infrastructure.

- Human-in-the-loop control mechanisms: As agents begin interacting with systems and processes, control mechanisms are required to balance autonomy and risk. Humans must validate outputs for accuracy, compliance, and fairness; work with subject matter experts to maintain and scale agent systems; and create a learning flywheel for ongoing improvements.
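The first and third factors fit together naturally: once a process is codified as explicit steps, the organization can mark which steps require human sign-off. The sketch below assumes a simple boolean gate per step; the `Step` class, the step names (loosely based on the credit-memo use case), and the `approve` callback are all hypothetical illustrations, not part of the McKinsey framework.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Step:
    name: str
    needs_approval: bool  # set by the organization when it codifies the process

# A codified workflow: the business process written down as explicit, ordered steps.
# Step names are hypothetical, loosely based on the credit-memo use case.
CREDIT_MEMO_WORKFLOW = [
    Step("collect borrower financial statements", needs_approval=False),
    Step("draft credit risk memo", needs_approval=False),
    Step("submit memo to credit committee", needs_approval=True),
]

def execute(workflow: list[Step], approve: Callable[[Step], bool]) -> list[str]:
    """Run steps in order, pausing for a human decision at gated steps."""
    log: list[str] = []
    for step in workflow:
        if step.needs_approval and not approve(step):
            log.append(f"halted before '{step.name}': reviewer declined")
            break
        log.append(f"done: {step.name}")
    return log

# In practice `approve` would route to a person; lambdas simulate the two outcomes.
print(execute(CREDIT_MEMO_WORKFLOW, approve=lambda step: True))
print(execute(CREDIT_MEMO_WORKFLOW, approve=lambda step: False))
```

Keeping the gate in the workflow definition, rather than inside each agent, makes it easy to tighten or relax autonomy step by step as confidence in the system grows.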

The authors cite a recent McKinsey “State of AI” survey that indicates 72% of companies surveyed are implementing AI solutions. They recommend that companies consider agents in their planning processes and future AI road maps.

The Hugging Face Agentic Framework

Thanks to an introduction from a former colleague two years ago, I met Ernest Spicer and Tiff Reiss, co-founders of Sagax.ai. When they formed an advisory board for their company, I agreed to join them.

During a recent board call, I asked Ernest to explain the difference between the Gen AI tools used by Sagax and “agents.” He began by explaining that Sagax.ai embraces a range of data science tools, not just generative AI. He added that generative AI tools are not the easy agent solution that numerous companies claim they are, and he referred me to an agent framework developed and posted on Hugging Face.

The section titled “What are Agent frameworks and why they matter?” was enlightening. A definition was provided:

An Agent framework is a layer on top of an LLM to make said LLM execute actions (like browse the web or read PDF documents) and organize its operations in a series of steps.
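The definition can be illustrated with a toy loop. This is a minimal sketch, not Hugging Face's implementation: `scripted_llm` is a hypothetical stand-in that returns canned decisions instead of calling a model, and `search` is a hypothetical tool returning a fixed string. The framework layer (`run_agent`) asks the "LLM" for the next step, executes any requested action, and appends the result to the history until a final answer appears.

```python
from typing import Callable

# Hypothetical tool. A real framework would wrap a browser, a PDF reader, etc.
def search(query: str) -> str:
    return "GAIA has 450+ questions split into three levels"  # canned result

TOOLS: dict[str, Callable[[str], str]] = {"search": search}

def scripted_llm(history: list[str]) -> dict[str, str]:
    """Stand-in for the LLM: request a search first, then answer from the observation."""
    observations = [h for h in history if h.startswith("Observation: ")]
    if not observations:
        return {"tool": "search", "input": "size of the GAIA benchmark"}
    return {"final_answer": observations[-1].removeprefix("Observation: ")}

def run_agent(task: str,
              llm: Callable[[list[str]], dict[str, str]] = scripted_llm,
              max_steps: int = 5) -> str:
    """The framework layer: ask the LLM for the next step, execute it, record the result."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        decision = llm(history)
        if "final_answer" in decision:
            return decision["final_answer"]
        result = TOOLS[decision["tool"]](decision["input"])
        history.append(f"Action: {decision['tool']}({decision['input']!r})")
        history.append(f"Observation: {result}")
    raise RuntimeError("step limit reached without a final answer")

print(run_agent("How many questions does GAIA contain?"))
```

The LLM only decides; the surrounding loop is what executes actions and organizes them into steps, which is the separation the Hugging Face definition describes.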

The next section was titled “The GAIA benchmark.” It opens with the statement that GAIA is arguably the most comprehensive benchmark for agents. Its 450+ questions are very difficult and hit on many challenges of LLM-based systems. The questions are divided into three levels to indicate advancing stages of model capabilities.
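Benchmark results like those cited next are accuracy rates: the share of questions whose final answer matches the expected answer, reported overall and by level. The sketch below shows that arithmetic under an assumed, simplified normalization (ignoring case, spaces, and thousands separators); it is not the official GAIA scorer, and the records are made up for illustration.

```python
from collections import defaultdict

def normalize(answer: str) -> str:
    # Simplified normalization (an assumption, not the official GAIA scorer):
    # case-insensitive, ignoring spaces and thousands separators.
    return answer.strip().lower().replace(",", "").replace(" ", "")

def score(records: list[tuple[int, str, str]]) -> tuple[dict[int, float], float]:
    """records holds (level, predicted, expected); returns per-level and overall accuracy."""
    hits: dict[int, int] = defaultdict(int)
    totals: dict[int, int] = defaultdict(int)
    for level, predicted, expected in records:
        totals[level] += 1
        hits[level] += normalize(predicted) == normalize(expected)
    per_level = {lvl: hits[lvl] / totals[lvl] for lvl in sorted(totals)}
    overall = sum(hits.values()) / sum(totals.values())
    return per_level, overall

# Made-up records for illustration; these are not GAIA questions or results.
demo = [
    (1, "Paris", "paris"),
    (1, "42", "41"),
    (2, "1,000", "1000"),
    (3, "Blue whale", "blue whale"),
]
print(score(demo))  # ({1: 0.5, 2: 1.0, 3: 1.0}, 0.75)
```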

According to the authors, GPT-4 doesn’t reach 7% on the validation set when used without any agentic setup, while OpenAI’s Deep Research reached 67.36%. The Hugging Face contributors built an open-source agent that achieved 55.15% on the validation set, and they attribute that result to letting their agents write their actions in code instead of JSON, a language-independent data format.
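The difference between the two action formats is easier to see side by side. In this sketch, `get_price` and its prices are hypothetical. A JSON action is a single tool call that the framework parses and dispatches; a code action is a snippet that can loop over inputs and combine results in one step. The sketch shows only the difference in form; it does not demonstrate why code actions scored better.

```python
import json

# Hypothetical tool and prices, for illustration only.
PRICES = {"flight": 420, "hotel": 310}

def get_price(item: str) -> int:
    return PRICES[item]

TOOLS = {"get_price": get_price}

# JSON-style actions: each action is a single tool call that the framework parses
# and dispatches. Combining the results would typically take another LLM turn.
json_actions = [
    '{"tool": "get_price", "args": {"item": "flight"}}',
    '{"tool": "get_price", "args": {"item": "hotel"}}',
]

def run_json_actions(actions: list[str]) -> list[int]:
    results = []
    for raw in actions:
        call = json.loads(raw)
        results.append(TOOLS[call["tool"]](**call["args"]))
    return results

# Code-style action: one snippet can loop over items and combine results itself.
code_action = "total = sum(get_price(item) for item in ['flight', 'hotel'])"

def run_code_action(snippet: str) -> int:
    # Expose the tools to the snippet. Builtins remain reachable, so this is NOT a
    # sandbox; a production framework would need to restrict or isolate execution.
    namespace: dict = {"get_price": get_price}
    exec(snippet, namespace)
    return namespace["total"]

print(run_json_actions(json_actions))  # [420, 310]
print(run_code_action(code_action))    # 730
```

The code action collapses two dispatches plus a separate aggregation into one step, which hints at why composable code can be a more expressive action format than one-call-at-a-time JSON.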

I like the Hugging Face definition of an agent framework. I also like the use of a validation dataset to evaluate agent performance. I don’t think the two can be separated. In fact, the Hugging Face collaborators may have to adjust their validation set as these tools become harder to differentiate.

On February 21, Wharton Professor Ethan Mollick posted the following on LinkedIn:

The confusion in the marketplace over what “agents” are is even worse than the previous confusion over what “AI” is.

At least with “AI” there were some definitions we generally agreed on. Now everyone is just calling every piece of software agentic and there is no common understanding to fall back on.

Professor Mollick’s post had generated nearly 800 likes, 149 comments, and 39 reposts when I wrote this article on Friday afternoon. Commenters posted many definitions. I didn’t find any that I thought were better than the Hugging Face definition, but I found several that were very clever. I liked how Deloitte differentiates between “agents,” such as chatbots and co-pilots, and “agentic AI,” which is more aligned with completing complex tasks with minimal human intervention.

Stanford Storm’s Response to a Prompt About Agents

I have found Stanford’s Storm AI to be very thoughtful in its sourcing for articles. I logged in and submitted the following prompt:

Agents are mentioned in the more recent prognostications about future developments in Gen AI as a potential game-changer.

I received a thoughtful, well-organized article with many relevant sources. The generated content was organized into the following sections:

- Summary

- Historical Context
  - Emergence of AI Agents
  - Transformative Developments
  - Current Landscape and Future Projections

- Characteristics of Gen AI Agents
  - Autonomy
  - Decision-Making Complexity
  - Learning and Adaptation
  - Accountability and Ethical Considerations
  - Application Versatility

- Applications of Gen AI Agents
  - Hyper-Personalization
  - Self-Healing Systems
  - Democratization of Development
  - AI-Driven Software Development
  - Healthcare Applications
  - Enhanced Business Operations
  - Security and Compliance Considerations

- Potential Impact on Society
  - Economic Considerations
  - Misinformation and Ethical Concerns
  - Social Implications

- Challenges and Limitations
  - Quality of Generated Outputs
  - Control Over Generated Outputs
  - Data Quality and Availability
  - Ethical and Societal Considerations
  - Regulatory Uncertainty
  - Integration and Technical Hurdles
  - Security Risks

- Frameworks and Guidelines for Ethical Deployment
  - Ethical Governance
  - Code of Conduct
  - Comprehensive Policy Framework
  - Transparency and User Consent
  - Addressing Bias and Fairness

- Future Prospects
  - Integration of Emerging Technologies
  - Call to Action for Stakeholders
  - Continuous Learning and Adaptability
  - Regulation and Ethical Considerations

The summary is appended below. I added a hyperlink for each of the 17 numbered sources in the summary (Storm cited 29 unique sources in total). I reviewed all of them and, as mentioned in earlier articles, found the sources used by Storm to be wide-ranging, relevant, and recently published. A few were duplicative, and several would likely not be considered worthy sources for a graduate-level paper. However, Storm produced its output much more quickly than I could have researched and written a paper without its assistance. If you are interested in clicking the hyperlinks, I particularly enjoyed reading [7], [11], [13], [15], and [17].

Storm’s Summary

Generative AI agents, often referred to as “agentic AI,” represent a significant advancement in the field of artificial intelligence (AI), characterized by their ability to operate autonomously and perform complex tasks without direct human oversight. These agents utilize large language models (LLMs) and advanced algorithms to reason through problems, adapt to new information, and execute plans, thereby transforming various sectors such as healthcare, finance, and customer service [1] [2] [3].

Their increasing capabilities have sparked considerable interest and investment, projecting the market for AI agents to grow from $5.1 billion in 2024 to an estimated $47.1 billion by 2030, highlighting their rising relevance in contemporary society [4] [5].

The emergence of generative AI agents is rooted in decades of AI research, evolving from simple rule-based systems to sophisticated, context-aware applications driven by machine learning and natural language processing technologies [1] [2]. This evolution has not only enhanced the efficiency of business operations but has also raised critical ethical questions regarding privacy, security, and accountability as these systems gain greater autonomy [6] [7]. Notably, concerns surrounding misinformation, algorithmic bias, and job displacement have become central topics of discussion, emphasizing the need for comprehensive regulatory frameworks to guide the responsible deployment of AI technologies [8] [9] [10].

As these AI agents become increasingly capable, they present both opportunities and challenges. On one hand, they promise improvements in productivity, customization, and operational resilience; on the other, they necessitate a reevaluation of societal norms related to employment, information dissemination, and ethical standards in technology use [11] [12] [13]. The interplay of these factors underscores the need for ongoing dialogue among stakeholders, including technologists, ethicists, and policymakers, to ensure that the advancements in generative AI serve the collective interests of society while mitigating potential harms [14] [15].

In conclusion, the rise of generative AI agents marks a transformative phase in the technological landscape, with implications that extend far beyond mere automation. Their impact on industry, governance, and daily life underscores the importance of addressing the multifaceted challenges they present, paving the way for a future where AI can be harnessed ethically and effectively for the benefit of all [16] [17].
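The market projection in Storm’s summary ($5.1 billion in 2024 growing to $47.1 billion by 2030) implies a compound annual growth rate of (end / start)^(1 / years) - 1 over six years. The figures are as quoted by Storm and are not independently verified; the short calculation below only makes the implied rate explicit.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end over `years`."""
    return (end / start) ** (1 / years) - 1

# Figures as quoted in Storm's summary (USD billions), not independently verified.
rate = cagr(start=5.1, end=47.1, years=2030 - 2024)
print(f"Implied growth: {rate:.1%} per year")  # Implied growth: 44.8% per year
```

A growth rate of roughly 45% a year sustained for six years is aggressive, which is worth keeping in mind when weighing projections like this one.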

Final Thoughts

For those of us trying to keep up with the ongoing changes in AI, the fact that the Hugging Face community posted a definition of an agent framework as recently as February 4, 2025, reflects both the pace of development and, as Ethan Mollick wrote, the difficulty of agreeing on a single definition of AI agents.

At the same time, it’s interesting that the ongoing improvements in Gen AI performance (specifically OpenAI’s) appear to be due to the agent layer added to the core LLM. I was surprised by the gap between GPT-4’s score of under 7% on the GAIA validation set without an agentic setup and Deep Research’s 67%.

Although published details are sketchy, we can assume that OpenAI has spent billions to improve its original LLM and will spend billions more to improve Deep Research’s capabilities (and its score on the validation set). None of the large tech companies that have developed LLMs are showing any sign of slowing their spending to improve their models.

With Deep Research already scoring 67% on the validation set, I believe the benchmark will need enhancements to measure the ability of an agent-aided LLM to accomplish the use cases proposed by McKinsey (and others).

Multistep processes involving thousands of scenarios, and decisions based on complex factors, will be difficult to build even with a sophisticated LLM. I suspect that the simplest use cases will be implemented first, and successful implementations will likely encourage companies to attempt more complex solutions. Deloitte predicts that 25% of companies using generative AI will implement an agentic AI pilot in 2025, with that number increasing to 50% by 2027.

Assuming many of these “pilots” work as planned, I suspect that agentic AI will be adopted more quickly. At the same time, some of these companies could choose use cases that are too complex for the technology as it currently exists, and setbacks could dissuade leaders from future innovation. Lastly, I’m not sure that pricing for enterprise agentic AI solutions has settled yet, which could also influence future implementations.