Shortly after ChatGPT was opened to the public, the emails and news-feed notices I received about artificial intelligence (AI) multiplied. Some were solicitations for training; others covered large language model (LLM) technology, particularly how to work with AI.

As a University of Pennsylvania graduate, I was already receiving Knowledge at Wharton, a business journal from the Wharton School. Ethan Mollick, a Wharton professor, began contributing to the journal more frequently. I liked his articles so much that I subscribed to his Substack blog, One Useful Thing.

Earlier this year, Professor Mollick announced that he was writing a book about AI that would be published in April. I was able to pre-order a copy that arrived the first week of April. Co-Intelligence did not disappoint.

Technology of Large Language Models

Part One focuses on the technology behind large language models, and Chapter One is aptly titled Creating Alien Minds. After a short history lesson that begins in the late 1700s and moves quickly to 2010, Mollick describes the evolution of AI over the last 14 years.

I was an active participant during the earlier years of the Big Data boom. With a colleague, Dr. Phil Ice, I experimented with neural networks to analyze large data sets. Unlike regression models, neural networks offered no algorithm we could trace step by step to explain the outcomes. It was unnerving at first.

Companies like Amazon were the biggest users of Big Data AI models at that time. They wove AI analysis into every component of their large and complicated supply chain. AI allowed Amazon to forecast product demand, organize and optimize its vast warehouses, and deliver the product to buyers. AI also powered Amazon’s warehouse robots.

Transformers

Mollick points to a 2017 paper by Google researchers, titled Attention Is All You Need, as the turning point in how computers understand and process language. The researchers proposed a new architecture, the transformer, that made it far easier for computers to process human language.

The transformer uses an attention mechanism to concentrate on the most relevant parts of a text, which lets the AI produce language that reads much more like a human’s. LLMs use transformers to analyze a piece of text and predict the next word or part of a word, known as a token.
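To make the idea of attention a little more concrete, here is a minimal toy sketch in Python of scaled dot-product attention, the operation defined in the 2017 paper as softmax(QKᵀ/√d)·V. The function names and the hand-picked vectors are illustrative assumptions; a real transformer learns its query, key, and value vectors during training and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each output row is a weighted average
    of the value vectors, weighted by how well the query matches each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Toy example: three "tokens" with hand-picked 2-D vectors (purely illustrative)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

A query that closely matches one key puts most of its weight on that key’s value, which is the sense in which the model "concentrates" on the relevant parts of a text.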

Most of us are aware that LLMs are trained on vast amounts of text from sources like websites, books, and other digital documents. From this text, the AI learns to recognize patterns and context in human language and stores what it learns in a huge number of adjustable parameters called weights. Those weights are what allow the model to communicate through written text.

The weights are calculated during training, as the LLM ingests text from its various sources. They tell the AI how likely it is that a word or words will appear in a particular order. The original ChatGPT had 175 billion weights.
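The idea of learning "the probability that words will appear in a specific order" can be illustrated with a deliberately tiny Python sketch. This is a simplified stand-in, not how an LLM works internally: a real LLM computes these probabilities from billions of learned weights, whereas this hypothetical bigram model simply counts which word follows which in a made-up sentence.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word follows each other word (a toy stand-in for training)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Convert the counts for one word into next-word probabilities."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))  # cat: 2/3, mat: 1/3
```

In this toy corpus, "the" is followed by "cat" twice and "mat" once, so the model predicts "cat" as the more likely next word. An LLM makes the same kind of prediction, but over tokens and with far richer context than a single preceding word.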

Calculating the weights takes powerful computer chips to handle the enormous number of calculations involved in analyzing billions of words. Mollick notes that the most advanced LLMs cost more than $100 million to train.

Some Training Material May Bias the Outcomes

Mollick provides his readers with a few examples of text sources that are included in these large data sets. Copyrighted materials are included, some of which the model owner may have acquired without permission. He notes that the legal implications are still unclear.

Because LLMs are built and trained on such a wide variety of data sources, what they learn is not always accurate. An AI can absorb biases, errors, and falsehoods from its data, which is gathered and processed without any built-in ethical boundaries. Reinforcement Learning from Human Feedback (RLHF), in which human raters score the model’s responses and the model is fine-tuned toward the preferred ones, is one approach to reducing these problems.

Diffusion Models

AI models designed to create images appeared around the same time as LLMs like ChatGPT. Instead of learning from text alone, these models ingest millions of images paired with text captions describing each picture, and they learn to associate words with visual concepts. To create an image from a text description, the model starts from random noise and gradually refines it into a picture that matches the description, a process called diffusion.

LLMs are also learning to work with images using extra technical components. The largest LLMs are acquiring multimodal analysis capabilities combining text and images.

Alignment of the AI Model

Mollick’s Chapter Two discusses the alignment problem: there is no reason for an AI to share humans’ views of ethics and morality. He addresses the concern that an AI could become more intelligent than any human, an artificial superintelligence (ASI).

An AI smarter than any person could build even smarter machines, widening the intelligence gap faster than humans, with their lesser intelligence, could keep up. An ASI aligned with humans could use its intelligence to cure diseases and solve humanity’s major problems. An unaligned ASI could choose to wipe out humans.

Researchers are evaluating ways to design AI systems aligned with human values and goals. More specifically, researchers want to make sure that AI does not harm humans. Mollick writes that some AI experts predict a 12 percent chance that an AI will kill at least 10 percent of humans by 2100, while other AI experts place that risk closer to two percent.

Mollick writes that “government regulation is likely to continue to lag the actual development of AI capabilities and might stifle positive innovation in an attempt to stop negative outcomes… Regulations are likely not going to be enough to mitigate the full risks associated with AI.”

Aligning AI with humanity will require citizens to push their governments to coordinate initiatives among corporations, governments, researchers, and wealthy individuals or other non-state entities. Ensuring the public is educated about AI is vital to successfully aligning AIs with human interests.

Four Rules for Co-Intelligence

Mollick believes that we (humans) need to establish a few ground rules to work successfully with AIs. He proposes four principles.

Principle 1: Always Invite AI to the Table

It’s a simple premise. Mollick believes that we should invite AI to help with everything we do unless there is a legal or ethical barrier. His reasoning is equally simple: familiarizing everyone with AI’s capabilities helps us understand how AI can assist us, or threaten us and our jobs.

People who “understand the nuances, limitations, and abilities of AI tools are uniquely positioned to unlock AI’s full innovative potential.” Such user innovators, who adapt tools to their own work, are frequently the source of breakthrough ideas for new products and services. Workers who “make AI useful for their jobs will have a large impact.”

Principle 2: Be the Human in the Loop

“To be the human in the loop, you will need to be able to check the AI for hallucinations and lies and be able to work with it without being taken in by it.”

Collaborating with AI by providing human perspectives, critical thinking skills, and ethical considerations keeps individuals engaged with the AI process and helps form a working co-intelligence with AI.

Active participants in the AI process can maintain control over the technology as well as its implications. This work ensures that AI solutions align with our values, ethics, and societal norms. It also provides individuals with a head start at adapting to changes before people who do not work with AI realize that change is coming.

Principle 3: Treat AI Like a Person (but Tell It What Kind of Person It Is)

Mollick writes that “anthropomorphism is the act of ascribing human characteristics to something that is nonhuman.” Many researchers worry that “treating AI like a person can create unrealistic expectations, false trust, or unwarranted fear among the public, policymakers, and even researchers.”

Despite these warnings, Mollick writes that he believes it is easier to work with AI “if you think of it like an alien person rather than a human-built machine.” To get the most from the relationship, he advises readers to “establish a clear and specific AI persona, defining who the AI is and what problems it should tackle.”
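As a hedged illustration of that advice, the Python sketch below assembles a prompt that tells the AI who it is and what problem it should tackle before asking for anything. Everything here is hypothetical: `build_persona_prompt` is an illustrative helper rather than part of any product, and the role/content message layout is an assumption modeled on the format several chat APIs accept. The sketch only builds the prompt; sending it to a model depends on the tool you use.

```python
def build_persona_prompt(persona, problem, audience):
    """Assemble chat messages that give the AI a clear persona before the request.

    Hypothetical helper: the role/content layout is an assumption based on
    common chat APIs, not a specific vendor's documented interface.
    """
    system = (
        f"You are {persona}. "
        f"Your audience is {audience}. "
        "Ask clarifying questions if the request is ambiguous."
    )
    return [
        {"role": "system", "content": system},  # who the AI is
        {"role": "user", "content": problem},   # what problem it should tackle
    ]

messages = build_persona_prompt(
    persona="an experienced instructional designer who specializes in online graduate courses",
    problem="Suggest three discussion prompts for a week on AI ethics.",
    audience="faculty who are new to teaching online",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona separate from the request makes it easy to reuse the same persona across many tasks, or to swap personas and compare the answers.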

Principle 4: Assume This Is the Worst AI You Will Ever Use

Given the progress of LLM development in 2023, Mollick predicts that bigger, smarter, and better models are coming. He writes that LLMs will be integrated with email, web browsers, and other common tools. (Note: I added the Copilot AI tool to my Microsoft Office 365 suite after reading the book.)

Mollick writes, “As AI becomes increasingly capable of performing tasks once thought to be exclusively human, we’ll need to grapple with the awe and excitement of living with increasingly powerful alien co-intelligences—and the anxiety and loss they’ll also cause.”

Those of us who embrace this principle will be able to adapt to change and remain competitive in a workplace driven by exponential advances in AI.

Part II: The Many Roles of AI

After providing an excellent primer on current AI technologies in Part I, Professor Mollick devotes the six chapters of Part II to the major roles that AI can play.

AI as a Person

Mollick recommends treating AI like a human because “in many ways, it behaves like one.” AI can write, analyze, code, and chat. However, it struggles with tasks that machines typically excel at, like repeating a process consistently and performing complex calculations without assistance.

Companies will begin to develop AIs built to optimize engagement with humans in the same way that social media sites are programmed to increase the time that you spend on your favorite site.

“If we remember that AI is not human, but often works in the way that we would expect humans to act, it helps us avoid getting too bogged down in arguments about ill-defined concepts like sentience.”

AI as a Creative

“Researchers have argued,” writes Mollick, “that it will be the jobs with the most creative tasks that tend to be most impacted by the new wave of AI.”

During training, LLMs learn relationships between tokens that may seem unrelated to humans but reflect deeper connections in meaning, which helps them combine ideas in unexpected ways.

There are several psychological tests of creativity, and Mollick writes that many AIs already score higher on them than humans do. However, without careful prompting, an AI tends to propose similar ideas every time, so a large group of creative people will usually generate a wider diversity of ideas than the AI.

With prompts that push the AI toward less popular answers, you can get it to generate more original ideas. The human in the loop can then filter the many answers to find the best.

“Writers are often the best at prompting AI for written material because they are skilled at describing the effects they want prose to create.”

AI as a Coworker

Mollick writes that at least four different research teams have tried to quantify how much overlap there is between jobs that humans can do and jobs that AI can do. He notes that each study has concluded that almost all our jobs will overlap with AI capabilities.

While college professors make up most of the top 20 jobs that overlap with AI, the job with the highest overlap is telemarketer. Only 36 of 1,016 job categories had no overlap, including dancers, athletes, roofers, and motorcycle mechanics, all highly physical jobs.

Mollick stresses that overlap does not mean AI will replace a person. Instead, he suggests that people welcome AI as a tool to automate mundane tasks, freeing us for work that requires creativity and critical thinking.

Professor Mollick suggests grouping tasks into Tasks for Me, Tasks for AI, Delegated Tasks, and Automated Tasks. He also describes two ways to put co-intelligence into practice at work: becoming a Centaur or becoming a Cyborg.

Centaur work draws a clear line between human and machine tasks, while Cyborgs blend machine and person. Cyborgs don’t just delegate; they integrate their efforts with the AI. Using AI to read papers is a Centaur task: the AI summarizes the paper, and the human works to comprehend it.

Mollick writes, “The best way for an organization to benefit from AI is to get the help of its most advanced users while encouraging more workers to use AI.” He adds that the evidence suggests workers with the lowest skills benefit the most from AI.

AI has the potential to be a great leveler, turning everyone into an excellent worker. If that happens, education and skill could become less valuable, and mass unemployment or underemployment becomes more likely as lower-cost workers do the same work in less time.

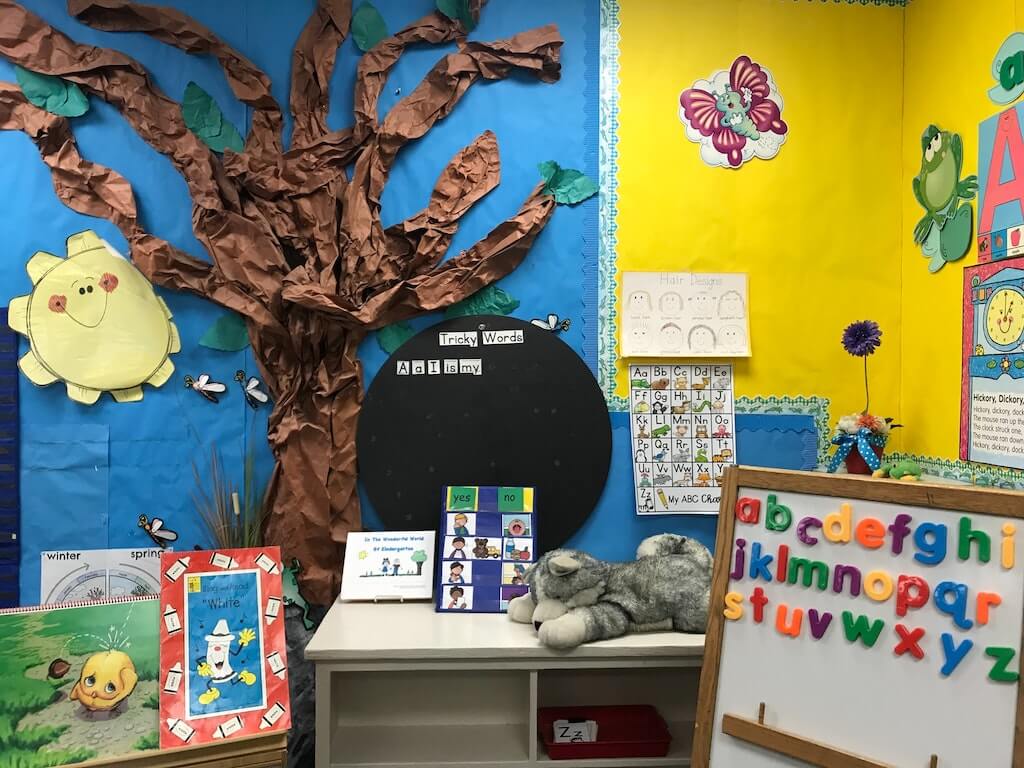

AI as a Tutor

Drawing on his experience as an educator, Mollick writes that the first large-scale impact of LLMs was to blow up homework. With AI, cheating is trivial. AI is good at summarizing and applying information, writing essays is one of its strengths, and there is no reliable way to detect whether a piece of text is AI-generated.

Mollick argues that teachers’ fears about students using AI are as unfounded as their earlier fears about calculators in classrooms. As with calculators, there will be assignments where AI assistance is necessary and others where AI use is not allowed. AI will not replace the need to learn to write and think critically.

Professor Mollick has made AI mandatory in all his classes at Wharton. He believes that AI provides the chance to generate new approaches to pedagogy that push students in ambitious ways. He provides several useful examples.

Students are using AI as a learning tool. Educators are using AI to prep for class. “The ability to unleash talent and to make schooling better for everyone from students to teachers to parents, is incredibly exciting.” The only question is whether we expand opportunity for everyone.

AI as a Coach

Perhaps the most surprising statement by Mollick comes at the beginning of Chapter Eight, where he writes, “The biggest danger to our educational system posed by AI is not its destruction of homework, but rather its undermining of the hidden system of apprenticeship that comes after formal education.”

Mollick adds that for most professionals, leaving school for the workforce marks the beginning of their practical education. People have gained expertise by starting at the bottom. Amateurs become experts by learning from more experienced experts and trying and failing under their supervision. AI is likely to upset that relationship.

Expertise requires a base of knowledge. Human working memory is limited, but when we solve problems it can draw on a nearly unlimited store of facts and procedures in long-term memory. We must learn facts and understand how they are connected. We become experts through deliberate practice.

Deliberate practice “requires a coach, teacher, or mentor who can provide feedback and careful instruction and push the learner outside their comfort zone.” Today’s AI is not able to connect complex concepts and hallucinates too much. It can, however, offer encouragement, instruction, and some elements of deliberate practice.

Not everyone can become an expert in everything. Mollick writes that in field after field, researchers are finding that a human working with an AI co-intelligence outperforms all but the best humans working without one. He does not think this will mean the death of expertise; instead, it will allow workers to focus on a narrower area of expertise.

There may be a role for humans who are experts at working with AI in specific fields. Even so, people will need to build their own expertise, which means continuing to learn to read and write, to know and understand history, and to master all the other foundational skills. AI will become our co-intelligence, pushing us to become better.

AI as Our Future

In his final chapter, Professor Mollick describes four possible futures for a world with AI. The implications of each are far from clear.

Scenario 1: As Good as It Gets

Professor Mollick considers it the least likely scenario that AI has already reached its limits, noting that there is little evidence for it. It is more likely that regulatory or legal action will halt further AI development. Even so, he believes this is the scenario most people are planning for.

“Assuming no further improvement in LLMs, AIs will have a large impact on the tasks of many workers, especially those in highly paid creative and analytical work.”

If AI does not advance any further, it will likely operate as a complement to humans rather than replacing them.

Scenario 2: Slow Growth

AI’s growth has been rapid, but Mollick writes that growth in most technologies eventually slows down. Even slower improvement would still represent an impressive rate of change. Everything in Scenario 1 still happens, but over time AI becomes able to do more complex work.

Work will continue to be transformed with annual improvements in the capabilities of AI models. Each year, more industries will be impacted.

Societal benefits will begin to appear. Advances in AI will curb the current slide in innovation and lead to breakthroughs in how we understand the universe, increase productivity growth, and advance educational opportunities for people around the world.

Scenario 3: Exponential Growth

Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, has held for about 50 years. AI might accelerate in a similar way if AI systems are used to create the next generation of AI software. Risks will be more severe and less predictable. Every computer system will be vulnerable to AI hacking. AI-powered influence campaigns will be everywhere. Governments will not have time to adjust.
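A quick worked calculation shows why exponential growth is so hard to plan for. Assuming the usual statement of Moore’s Law, one doubling roughly every two years (an approximation, not a figure from the book), 50 years of doubling compounds as follows:

```latex
\text{doublings} = \frac{50\ \text{years}}{2\ \text{years per doubling}} = 25,
\qquad 2^{25} = 33{,}554{,}432 \approx 3.4 \times 10^{7}
```

In other words, steady doubling over a working lifetime produces a roughly 33-million-fold increase, which is the kind of change this scenario imagines for AI.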

AI companions will become more compelling. Loneliness will be less of an issue, but new forms of social isolation will emerge. AI will be able to unlock human potential. Entrepreneurship and innovation will flourish.

AIs 100 times better than GPT-4 will start to take over human work. Shortened work weeks, universal basic income, and other policy changes might become a reality as the need for human work decreases.

Scenario 4: The Machine God

Mollick writes that in this scenario, machines reach artificial general intelligence (AGI), and human supremacy ends. No one knows what will happen. He believes that dwelling too much on this scenario makes us feel powerless. Instead of worrying about an AI apocalypse, we should worry about the many small catastrophes that AI can cause.

AI does not need to be catastrophic. It can be empowering. Students who were left behind can find new paths forward. Workers stuck in tedious or useless work can become productive. Many people will shape what AI means for their customers, students, and their environment. To make these choices matter, serious discussions need to begin now.

Epilogue: AI as Us

AIs are trained on our cultural history, and reinforcement learning from our data aligns them with our goals. Mollick concludes by writing, “AI is a mirror, reflecting back at us our best and worst qualities. We are going to decide on its implications, and those choices will shape what AI actually does for, and to, humanity.”

A Few More Thoughts

I enjoy reading Ethan Mollick’s weekly blog, so my expectations for this book were extremely high. It did not disappoint. He does an excellent job of laying out how the technology evolved before taking the reader through the many roles that AI can already perform.

There are many great examples that I did not include in this overview, so if you have not yet ordered the book, I hope you will. The scenario planning at the end of the book is not too dissimilar to the risks outlined in Mustafa Suleyman’s book, The Coming Wave.

If there’s a single piece of advice I would recommend everyone implement tomorrow, it’s Principle 1: Always invite AI to the table.

I signed up for the premium version of ChatGPT very early, and I try to use it for 15 to 30 minutes every weekday. After reading Principle 1, I finally stopped procrastinating and signed up for Copilot for Office 365. I used it to review this article.

The education field is awash with critics of AI (“the sky is falling”), proponents of AI (many building courses, writing books and papers, leading seminars and conferences), and those who are too overwhelmed to know where to begin. It’s time for all of us to accept that AI is here to stay (remember Mollick’s calculator example) and use it to enhance our teaching.

Buy Co-Intelligence. If you like it, buy several copies for fellow educators, administrators, and board members. Follow Principle 1 and start using AI before you are left behind.